Overview

Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling your containerized applications using Google infrastructure. The Kubernetes Engine environment consists of multiple machines (specifically Compute Engine instances) grouped to form a container cluster. In this lab, you get hands-on practice with container creation and application deployment with GKE.

Cluster orchestration with Google Kubernetes Engine

Google Kubernetes Engine (GKE) clusters are powered by the Kubernetes open source cluster management system. Kubernetes provides the mechanisms through which you interact with your container cluster. You use Kubernetes commands and resources to deploy and manage your applications, perform administrative tasks, set policies, and monitor the health of your deployed workloads.

Kubernetes draws on the same design principles that run popular Google services and provides the same benefits: automatic management, monitoring and liveness probes for application containers, automatic scaling, rolling updates, and more. When you run your applications on a container cluster, you're using technology based on Google's 10+ years of experience with running production workloads in containers.

Kubernetes on Google Cloud

When you run a GKE cluster, you also gain the benefit of advanced cluster management features that Google Cloud provides. These include:

Load balancing for Compute Engine instances

Node pools to designate subsets of nodes within a cluster for additional flexibility

Automatic scaling of your cluster's node instance count

Automatic upgrades for your cluster's node software

Node auto-repair to maintain node health and availability

Logging and Monitoring with Cloud Monitoring for visibility into your cluster

Now that you have a basic understanding of Kubernetes, you will learn how to deploy a containerized application with GKE in less than 30 minutes. Follow the steps below to set up your lab environment.

Source:

This lab is from Qwiklabs.

Setup and requirements

Sign in to the Google Cloud Platform (GCP) Console

Task 1: Set a default compute zone

Your compute zone is an approximate regional location in which your clusters and their resources live. For example, us-central1-a is a zone in the us-central1 region.

To set your default compute zone to us-central1-a, start a new session in Cloud Shell, and run the following command:

gcloud config set compute/zone us-central1-a

Expected output:

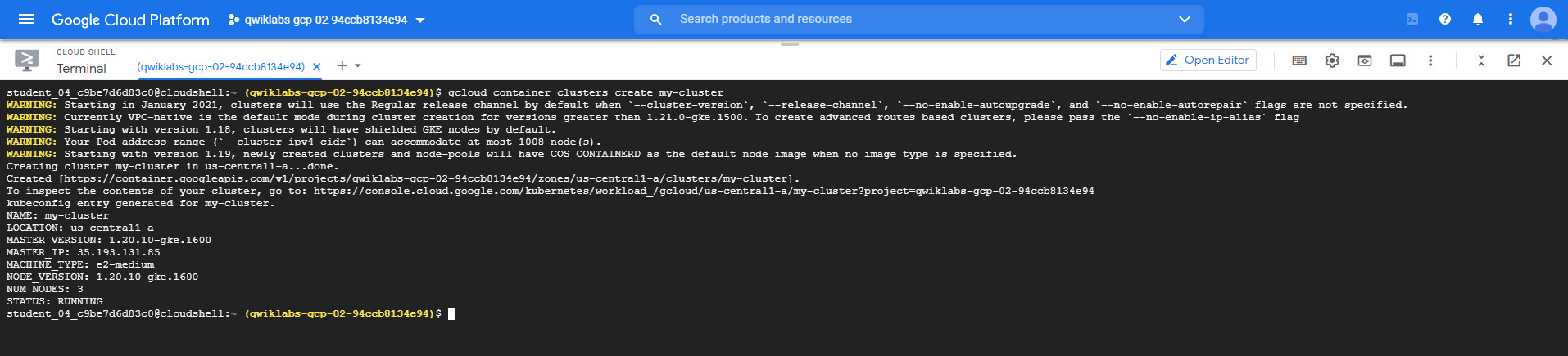
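A zone name is its region name plus a one-letter suffix: us-central1-a sits in us-central1. The relationship described above can be checked directly in bash. Both functions below are hypothetical helpers, not part of the lab. `zone_to_region` strips the suffix. `set_default_zone` sets the default and then reads it back with `gcloud config get-value` to confirm the change took effect.

```shell
# Hypothetical helpers (bash); the names are illustrative, not part of gcloud.

# Derive the region from a zone by dropping the trailing "-<letter>",
# e.g. us-central1-a -> us-central1.
zone_to_region() {
  local zone="$1"
  echo "${zone%-*}"
}

# Set the default compute zone (us-central1-a unless another zone is passed),
# then print the stored value so a mistyped zone is easy to spot.
set_default_zone() {
  local zone="${1:-us-central1-a}"
  gcloud config set compute/zone "$zone"
  gcloud config get-value compute/zone
}

# Usage in Cloud Shell:
#   set_default_zone us-central1-a
#   zone_to_region us-central1-a   # prints us-central1
```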

Task 2: Create a GKE cluster

A cluster consists of at least one cluster master machine and multiple worker machines called nodes. Nodes are Compute Engine virtual machine (VM) instances that run the Kubernetes processes necessary to make them part of the cluster.

Note: Cluster names must start with a letter and end with an alphanumeric, and cannot be longer than 40 characters.

1. To create a cluster, run the following command, replacing [CLUSTER-NAME] with the name you choose for the cluster (for example, my-cluster).

gcloud container clusters create [CLUSTER-NAME]

You can ignore any warnings in the output. It might take several minutes to finish creating the cluster.
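You can check the naming rule from the note above before calling gcloud. The sketch below is a hypothetical bash helper. Besides the three rules stated in the note, it assumes that names may contain only lowercase letters, digits, and hyphens. That restriction comes from GKE's broader naming requirements and is not stated in this lab.

```shell
# Hypothetical helper (bash): succeed only if the name follows the note's rules
# (starts with a letter, ends with an alphanumeric, at most 40 characters),
# plus the ASSUMPTION that only lowercase letters, digits and hyphens are allowed.
is_valid_cluster_name() {
  local name="$1"
  local re='^[[:lower:]]([[:lower:][:digit:]-]*[[:lower:][:digit:]])?$'
  [ "${#name}" -le 40 ] || return 1
  [[ "$name" =~ $re ]]
}

# Validate first, then create the cluster with the same command as step 1.
create_cluster() {
  local name="$1"
  if ! is_valid_cluster_name "$name"; then
    echo "invalid cluster name: $name" >&2
    return 1
  fi
  gcloud container clusters create "$name"
}

# Usage: create_cluster my-cluster
```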

Task 3: Get authentication credentials for the cluster

After creating your cluster, you need authentication credentials to interact with it.

1. To authenticate with the cluster, run the following command, replacing [CLUSTER-NAME] with the name of your cluster:

gcloud container clusters get-credentials [CLUSTER-NAME]
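get-credentials writes an entry to your kubeconfig file and makes it kubectl's current context. To confirm this, you can read the context back. The sketch below is a hypothetical bash helper. It assumes GKE's usual context naming pattern, gke_<project>_<zone>_<cluster>, so it only checks that the context name ends with the cluster name.

```shell
# Hypothetical helper (bash): fetch credentials, then verify that kubectl's current
# context refers to the cluster. ASSUMES GKE's gke_<project>_<zone>_<cluster> naming.
connect_to_cluster() {
  local name="$1" ctx
  gcloud container clusters get-credentials "$name" || return 1
  ctx="$(kubectl config current-context)" || return 1
  case "$ctx" in
    *_"$name") echo "connected: $ctx" ;;
    *) echo "unexpected context: $ctx" >&2; return 1 ;;
  esac
}

# Usage: connect_to_cluster my-cluster && kubectl get nodes
```

Running kubectl get nodes afterwards should list the cluster's worker nodes.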

Task 4: Deploy an application to the cluster

You can now deploy a containerized application to the cluster. For this lab, you'll run hello-app in your cluster.

GKE uses Kubernetes objects to create and manage your cluster's resources. Kubernetes provides the Deployment object for deploying stateless applications like web servers. Service objects define rules and load balancing for accessing your application from the internet.

1. To create a new Deployment hello-server from the hello-app container image, run the following kubectl create command:

kubectl create deployment hello-server --image=gcr.io/google-samples/hello-app:1.0

This Kubernetes command creates a Deployment object that represents hello-server. In this case, --image specifies a container image to deploy. The command pulls the example image from a Container Registry bucket. The :1.0 suffix in gcr.io/google-samples/hello-app:1.0 is the tag that selects the specific image version to pull. If no tag is specified, the image tagged latest is used.
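kubectl create deployment is an imperative shortcut. You can also write the same Deployment declaratively and apply it with kubectl apply. The sketch below prints a roughly equivalent manifest for this lab, with one replica, pods labeled app=hello-server, and the image pinned to the 1.0 tag.

Several details are assumptions:

- The helper name and the container name are hypothetical.
- containerPort 8080 is chosen to match the port used by kubectl expose in the next step.
- The manifest is not guaranteed to match what kubectl create generates field for field.

```shell
# Hypothetical helper (bash): print a Deployment manifest for the given name and image.
# The container name and containerPort 8080 are illustrative assumptions.
deployment_manifest() {
  local name="$1" image="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        ports:
        - containerPort: 8080
EOF
}

# Usage (requires the cluster credentials from Task 3):
#   deployment_manifest hello-server gcr.io/google-samples/hello-app:1.0 | kubectl apply -f -
```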

2. To create a Kubernetes Service, a resource that lets you expose your application to external traffic, run the following kubectl expose command:

kubectl expose deployment hello-server --type=LoadBalancer --port 8080

In this command:

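--port specifies the port the Service listens on. Because --target-port is not set, traffic is forwarded to the same port (8080) on the container, which is the port hello-app listens on.

--type=LoadBalancer creates a Compute Engine load balancer for your container.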
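You can also describe the Service created by kubectl expose declaratively. In this sketch, which again uses a hypothetical helper, port is the port the load balancer serves on, and targetPort is the container port that traffic is forwarded to. Here both are 8080, mirroring kubectl expose when --target-port is omitted. The selector app=hello-server relies on the label that kubectl create deployment puts on the pods.

```shell
# Hypothetical helper (bash): print a LoadBalancer Service manifest that forwards
# <port> on the load balancer to the same port on pods labeled app=<name>.
service_manifest() {
  local name="$1" port="${2:-8080}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: LoadBalancer
  selector:
    app: ${name}
  ports:
  - port: ${port}
    targetPort: ${port}
EOF
}

# Usage: service_manifest hello-server 8080 | kubectl apply -f -
```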

3. To inspect the hello-server Service, run kubectl get:

kubectl get service

Note: It might take a minute for an external IP address to be generated. Run the previous command again if the EXTERNAL-IP column status is pending.
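Instead of rerunning kubectl get service by hand while EXTERNAL-IP shows pending, you can poll for the address. This sketch assumes that the load balancer reports an IP address (not a hostname) in the Service's status.loadBalancer.ingress field. The helper name, the default retry count, and the POLL_SECONDS interval are all hypothetical choices.

```shell
# Hypothetical helper (bash): poll a Service until it has an external IP, then print it.
# Arguments: service name (default hello-server), max attempts (default 30).
# POLL_SECONDS sets the wait between attempts (default 10).
wait_for_external_ip() {
  local svc="${1:-hello-server}" tries="${2:-30}" ip="" i
  for ((i = 0; i < tries; i++)); do
    # Empty output means the external IP is still pending.
    ip="$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "${POLL_SECONDS:-10}"
  done
  echo "timed out waiting for an external IP on $svc" >&2
  return 1
}

# Usage: EXTERNAL_IP="$(wait_for_external_ip hello-server)"
```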

4. To view the application in your web browser, open a new tab and enter the following address, replacing [EXTERNAL-IP] with the EXTERNAL-IP value shown for hello-server:

http://[EXTERNAL-IP]:8080
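From Cloud Shell, you can make the same check with curl. The hypothetical helpers below build the URL in the form shown above and fetch it. The curl flags make the request fail on HTTP errors and stop waiting after 10 seconds.

```shell
# Hypothetical helpers (bash): build http://<EXTERNAL-IP>:<port> and fetch it with curl.
app_url() {
  local ip="$1" port="${2:-8080}"
  echo "http://${ip}:${port}"
}

check_app() {
  # --fail: non-zero exit on HTTP errors; --max-time: give up after 10 seconds.
  curl --silent --show-error --fail --max-time 10 "$(app_url "$1" "${2:-8080}")"
}

# Usage: check_app "$EXTERNAL_IP"
```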

Task 5: Delete the cluster

1. To delete the cluster, run the following command:

gcloud container clusters delete [CLUSTER-NAME]

2. When prompted, type Y to confirm.

Deleting the cluster can take a few minutes. For more information on deleting GKE clusters, see the documentation.
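In a script, you can skip the confirmation prompt with gcloud's global --quiet flag. Passing --zone avoids depending on the default set in Task 1. The helper below is a hypothetical sketch. Because --quiet deletes without asking, the cluster name has to be right.

```shell
# Hypothetical helper (bash): delete a cluster without the interactive Y/n prompt.
# Arguments: cluster name, zone (defaults to the lab's us-central1-a).
delete_cluster() {
  local name="$1" zone="${2:-us-central1-a}"
  gcloud container clusters delete "$name" --zone "$zone" --quiet
}

# Usage: delete_cluster my-cluster
```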

Congratulations!

You have just deployed a containerized application to Kubernetes Engine!

Reference:

1. Qwiklabs

2. Google Cloud Certification - Associate Cloud Engineer

3. Google Kubernetes Engine (GKE)

4. Google Kubernetes Engine documentation

5. Manage Containerized Apps with Kubernetes Engine | Google Cloud Labs

First published / Last updated: 2021.11.08 / 2021.11.08
